Whenever a financial or technological disaster takes place, people wonder if it could have possibly been averted.

My guests today say that the answer is often yes, and that the lessons around why big disasters happen can teach us something about preventing catastrophes in our businesses and personal lives. Their names are Chris Clearfield and Andras Tilcsik, and they’re the authors of Meltdown: Why Our Systems Fail and What We Can Do About It.

We begin our discussion getting into how they got interested in exploring how everything from plane crashes to nuclear meltdowns to flash stock market crashes actually share common causes. We then discuss the difference between complicated and complex systems, why complex systems have weaknesses that make them vulnerable to failure, and how such complexity is on the rise in our modern, technological era. Along the way, Chris and Andras provide examples of complex systems that have crashed and burned, from the Three Mile Island nuclear reactor meltdown to a Starbucks social media campaign gone awry. We end our conversation digging into specific tactics engineers and organizations use to create stronger, more catastrophe-proof systems, and how regular folks can use these insights to help make their own lives run a bit more smoothly.

Show Highlights

- What is “tight coupling”? How can it lead to accidents?

- What’s the difference between a complicated system and a complex system?

- How has the internet added more complexity to our systems?

- Why trying to prevent meltdowns can backfire

- So how can organizations cut through complexity to actually cut down on meltdowns?

- The way to build organizational culture to get people to admit mistakes

- Why we have a tendency to ignore small anomalies

- Is it really possible to predict meltdowns?

- The pre-mortem technique

- How to use some of these techniques in your personal life

- Why a diversity of viewpoints actually works, albeit counterintuitively

- Why you should seek out people who will tell you the truth, and even give you contradictory information

- How leaders can mitigate complexity and conflict

- What is “get-there-itis” and how does it contribute to disasters?

Resources/People/Articles Mentioned in Podcast

- Why Group Culture is So Important to Success

- Three Mile Island accident

- Deepwater Horizon

- Charles Perrow and his book Normal Accidents

- Delta Data Center Fire

- “Hackers Remotely Kill a Jeep on the Highway”

- “Starbucks Twitter Campaign Goes Horribly Wrong”

- What You Need to Know About the 737 Crashes

- Space Shuttle Columbia Disaster

- My interview with Nassim Nicholas Taleb

- Think Like a Poker Player to Make Better Decisions

Connect With Chris and Andras

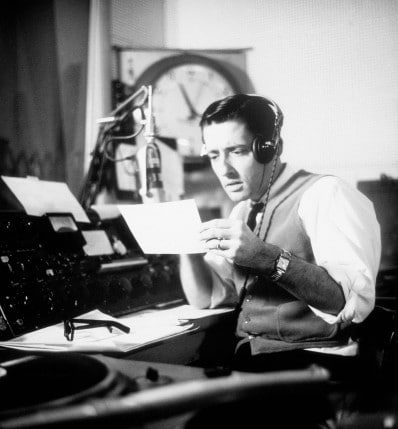

Listen to the Podcast! (And don’t forget to leave us a review!)

Recorded on ClearCast.io

Listen ad-free on Stitcher Premium; get a free month when you use code “manliness” at checkout.

Podcast Sponsors

GiveAGallon.com. One in six kids in the U.S. faces hunger, and that number goes up during the summer months when kids don’t have access to school meal programs. Go to GiveAGallon.com to donate to a food bank in your community.

Read the Transcript

Brett McKay: Welcome to another edition of the Art of Manliness podcast. Whenever a financial or a technological disaster takes place, people wonder if it could’ve possibly been averted. My guests today say that the answer is often yes and that the lessons around why big disasters happen can teach us something about preventing catastrophes in our businesses and in our personal lives. Their names are Chris Clearfield and Andras Tilcsik. They’re the authors of Meltdown: Why Our Systems Fail and What We Can Do About It. We begin our discussion getting into how they got interested in exploring how everything from plane crashes to nuclear meltdowns to flash stock market crashes actually share common causes. We then discuss the difference between complicated and complex systems, why complex systems have weaknesses that make them vulnerable to failure and how such complexities are on the rise in our modern technological era. Along the way, Chris and Andras provide examples of complex systems that have crashed and burned from the Three Mile Island nuclear reactor meltdown to a Starbucks social media campaign gone awry. We end our conversation digging into specific tactics engineers and organizations use to create stronger, more catastrophe-proof systems and how regular folks can use these insights to help make their lives run a bit more smoothly. After the show’s over, check out our show notes at aom.is/meltdown. Chris and Andras join me now by Skype. Chris Clearfield, Andras Tilcsik, welcome to the show.

Chris Clearfield: Thank you so much.

Andras Tilcsik: Thanks.

Brett McKay: So, you two co-authored a book, Meltdown: What Plane Crashes, Oil Spills, and Dumb Business Decisions Can Teach Us About How to Succeed at Work and at Home, and I was telling you all before we started, I read this book while I was on an airplane reading about catastrophic plane crashes, which made me feel great. I’m curious. How did you two start working together on this idea of exploring catastrophic failures and what they can teach us about how to be successful in life?

Chris Clearfield: Well, I’ll tell maybe my half of kind of how I came to this work, and then, Andras, you can maybe pick up with sort of how we came together with it. I studied physics and biochemistry as an undergrad. So, I was always kind of a science geek, but then, I got intercepted and ended up working on Wall Street, and I ended up working there kind of during the heart of the 2007/2008 financial crisis. So, all around me, kind of the whole system of finance was struggling and collapsing, and I had this observation that, “Oh, I think that Bank X is going to do better than Bank Y in this process.” And then, I kind of took a step back and thought, “Well, that’s really interesting. I don’t work at either of these places. How could I possibly say something about the ability of these firms to manage risk just as an outsider, just based on kind of a really bare understanding of their culture?” And so, that made me just really interested in this question, sort of how organizations learn to manage failure and manage complexity, and at the same time, I was actually learning to fly airplanes. I’m a pilot. I’m an instructor now, but at the time, I was just starting to learn.

And I was kind of reading obsessively about why pilots crash planes, why well-trained flight crews make mistakes that mean that they make bad decisions, or they have these errors in judgment that cause them, despite their best efforts, to kind of crash and do the wrong thing, and that, to me, was also the same kind of thing. It’s like the culture of a team mattered and the culture of an airline, where the kind of way people approach these challenging situations really mattered. And then, the third piece of the puzzle that made me realize what a widespread issue this was was when BP’s Deepwater Horizon oil rig exploded in the Gulf of Mexico, and just because I was interested, I started reading about that accident and realized that there was this kind of same set of sort of system and organizational factors at play in that accident. It was a complex system with a lot of different aspects to it, a lot of different things that had to be done correctly, and there wasn’t a great culture to support that. So, that made me realize what a widespread problem it was, but I was still thinking about it mostly from the systems angle, and then, Andras and I came together at some point and started thinking kind of more broadly about it together.

Andras Tilcsik: Yeah, and I came from a social science angle, and so, I was more interested in the organizational aspects of this, but in some ways, we started to converge around this idea that if you look at these different failures, the financial crisis, Deepwater Horizon, a plane crash, if you read enough of the literature and the accident reports on these, you start to see some of the same themes come up over and over again. And I felt that was fascinating, that there’s this sort of dark side to organizations, but it’s not unique to each failure. There’s some kind of fundamentals. And in some ways, we started to see that over time as this very positive message, potentially, in that while it implies that we are making the same dumb mistakes over and over again across different industries and in different life situations, if it’s the same things that get us into trouble across the situations, it also means that if we figure out a solution in some fields, we can try to apply those in other fields. And we can all learn from others, from other industries. So, it wasn’t just gloom and doom, and I think that really helped move this whole project forward.

Brett McKay: Well, let’s talk about … I think it’s interesting. You guys highlight this study, this scientific study of catastrophes, relatively new, and it started with this guy named Charles Perrow. Is that how you say his last name? Perrow?

Chris Clearfield: Yeah. Perrow.

Brett McKay: Perrow. Andras, I think this would be in your wheelhouse since he was a social psychologist. What was his contribution? What was his insight about catastrophic failures and why they occur on an organizational level?

Andras Tilcsik: Yeah. So, Charles Perrow or Chick Perrow as people more affectionately know him, he’s actually a sociologist, fascinating character. He’s in his early 90s now. He got into this whole field back in the late ’70s in the wake of the Three Mile Island partial meltdown, and his whole entry into this field was just so interesting to Chris and me when we were studying it. He was an outsider, studying very obscure organizational, sociological topics, and then, he ends up looking at Three Mile Island from this very organizational, sociological, social scientific perspective, rather than the standard, kind of hardcore engineering perspective. And in that process, he develops this big insight that the most fascinating thing about Three Mile Island is that it’s this big meltdown, and yet, the causes of it are just so trivial, a combination of a bunch of small things, kind of like a plumbing error combined with some human oversight or problems with kind of human attention and sort of nothing out of the ordinary. It’s not like a big earthquake or a terrorist attack. Those little things, they just combine and bring down this whole system, and that really inspired Perrow at the time to kind of figure out what are the systems in which we would see these types of failures, these types of meltdowns.

And that’s when he develops this interesting typology of systems, which really has two parts. One is complexity. The other is tight coupling. Complexity just means that the system has a lot of elaborately connected parts. So, it’s like an intricate web and less like a linear assembly line type of process, and it’s not a very visible system. You can’t just send a guy to the nuclear core and tell them, “Hey, take a look. Look at what’s going on in there, and come back, report back to me.” You have to, instead, rely on indirect indicators, and the other part of this typology that he comes up with is what he calls tight coupling, which really just means the lack of slack in the system. The system is unforgiving. It doesn’t really have a lot of margin for error, and he says that when a system has both of those components, then it’s especially ripe for these types of big surprising meltdowns that come, not from a big external shock, but from little things coming together inside the system in surprising ways. And he says that’s because complexity tends to confuse us. It tends to produce symptoms in a system that are hard to understand, hard to diagnose as they’re happening, and if the system also doesn’t have a lot of slack in it, if it’s tightly coupled, if it’s unforgiving, then it’s just going to run away with it.

It’s just going to run away with us. We’ll get confused, and we’ll be surprised, and it’s going to be a particularly nasty kind of surprise. You can’t easily control it. And in some ways, what we do in this book is that we build on Perrow’s insight that came from this one particular accident at Three Mile Island, and we tried to extend and apply that insight to modern systems basically 40 years later, and we still see a lot of resonance of that very simple, yet deeply insightful framework.

Brett McKay: Well, let’s take this idea of complexity because you guys highlight in the book that something can be complicated but not complex. What’s the difference between the two things?

Chris Clearfield: I think that complexity is a very specific way of thinking about the amount of connectedness in the system, the amount of kind of the way that the parts come together. So, a complicated system might be a system that has lots of different pieces or has lots of moving parts, like the kind of hardware in our phone, but most of those things, they interact in very stable and predictable ways. Whereas, a complex system, it has these interactions that are sort of important either by design or important kind of by accident. The Three Mile Island example is just one example of this, where you have these kind of parts of the system that end up being physically or kind of informationally close to each other in a way that’s not intended by the designers. Delta, for example, had a fire in a data center which brought down their whole fleet of planes. I don’t mean made them crash, but I mean grounded their whole fleet of planes. They couldn’t dispatch new flights. And so, you have this really unintentional connection between these different parts of the system that come from the way it’s built, that come from the complexity. So, I think complexity refers to the connections being more important than the components themselves.

Brett McKay: Okay. So, a complicated system, or yeah, a complicated system would be like the Rube Goldberg machine, right?

Chris Clearfield: Yes.

Brett McKay: Where there’s different little parts, but it all works in a linear fashion, and if one part’s messed up, you can figure out, you can replace that part, and it’ll keep going. You can keep things moving. With a complex system, it would be something like there’s a network effect, right, where parts that you don’t think are connected linearly start interacting, and it affects the whole system.

Chris Clearfield: Exactly. That’s a great way to put it.

Brett McKay: Okay.

Chris Clearfield: You should come with us on other podcasts, so you can help us with that.

Brett McKay: All right. So, that’s complexity. So, the other part of what causes catastrophic meltdowns is tight coupling. So, what would be an example of a tightly coupled system, right, where there’s very little slack?

Andras Tilcsik: I think one neat way to think about it is to take one system and sort of think about when it is more or less tightly coupled. So, take an airplane, and you’re at the airport. You’re leaving the gate. It’s a relatively loosely coupled system at that point in the sense that there’s a lot of slack in it. There’s a lot of buffer. There’s a lot of time to address problems and figure out what’s going on if something bad or concerning happens, right? So, say you are the pilot. You notice something going on. You can go back to the gate. You can bring in other people. You can have a repair crew. You can go out, have a cup of coffee, think about what’s happening. Once you are in the air, it’s a lot more tightly coupled, and once you are, say, over the Atlantic, very far from any other alternative option, such as another airport where you could land, it’s extremely tightly coupled. There is no time to think. There is no time to get new parts. There are no other resources you can lean on, and you can’t just say, “Hey. This is a very complex, confusing situation. I’m going to step outside, have my coffee, come back after I’ve had some fresh air, and then solve it.” Things just happen fast in real time, and you just have to work with what you’ve got.

Brett McKay: And another example you give in the book, sort of like just a run-of-the-mill tightly coupled system, is like if you’re cooking a meal, right, and you have to time things just right so that the meal is all done at the same time, and if you mess one thing up, then you’re going to be 20, 30 minutes late with serving your meal.

Chris Clearfield: Right. Yeah. That’s a great one, and the other example that I like to think about that just crops up in my personal life when I have to travel for work is what does my flight look like. Do I have a direct flight from Seattle to San Francisco? That’s pretty simple, and there might be problems in the network, but by and large, if I show up for that flight on time, I’m going to get on the plane. It’s going to fly to San Francisco, and I’m going to be fine. But if I’m going to some place on the east coast from Seattle where I live, then, do I have to change planes in Chicago? Well, that adds a bit of complexity. Now, there’s kind of more parts of my trip where things can go wrong, and if I have two hours to change planes, well, that’s sort of a buffer. That’s relatively loosely coupled. If I have four hours, it’s super loosely coupled, but if I have 45 minutes, suddenly, I’m in a really tightly coupled system where any delay caused by complexity is likely to be very disruptive to my whole trip, be very disruptive to my whole system.

Brett McKay: How has digital technology and the internet added more complexity and more coupling to our systems?

Chris Clearfield: There’s so many good examples of this, and I think this is one of the fascinating things for us about working on this book that when we started, we really expected to be working on the big … writing about big disasters, writing about Deepwater Horizon and Three Mile Island, and we definitely write about those things, but what struck us was how many more systems have moved into Perrow’s danger zone that are complex and tightly coupled. And there’s so many examples, but one of the ones that I love is our cars these days. So, I have a 2016 Subaru Outback. This is not … I mean, it’s a lovely car, but it’s not a fancy car, and yet, it has the ability to look up stock prices on the infotainment system in the dashboard. And so, suddenly, you’ve taken this piece of technology that used to be relatively simple and relatively standalone, and now, many, many parts of the car are controlled by computers themselves, and that’s for very good reasons: to add features and increase the efficiency of how the engine works and all of this good stuff.

But now, suddenly, manufacturers are connecting these cars to the internet, and they are part of this global system. There’s this fascinating story in the book that was covered originally by a Wired reporter called Andy Greenberg, and it is about these hackers that figure out that they can, through the cellular network, hack into and remotely control Jeep Cherokees. And so, they’ve done all this research, and fortunately, the guys who did that were white hat hackers. They were hackers whose role was to find these security flaws and help manufacturers fix them, but that didn’t necessarily have to be the case. You suddenly have this example of complexity and tight coupling coming because manufacturers are now connecting cars to the internet. I mean, Teslas can be remotely updated overnight while they’re in your garage, and that adds great capabilities, but it also adds this real ability for something to go wrong either by accident or nefariously.

Brett McKay: But you also give other examples of the internet creating more complexity in sort of benign … well, not benign ways, but ways you typically don’t think of. So, you gave the example of Starbucks doing this whole Twitter campaign that had a social media aspect to it, and it completely backfired and bit them in the butt.

Andras Tilcsik: Yeah, totally. I think that was one of the early examples that actually caught our attention. This is a Starbucks marketing campaign, global marketing campaign around the holiday season where they created this hashtag, #SpreadTheCheer, and they wanted people to post photos and warm and fuzzy holiday messages with that hashtag. And then, they would post those messages on their social media as well as in some physical locations on large screens. In some ways, they created a very complex system, right? There were all these potential participants who could use the hashtag. They would be retweeting what they were saying, and then, there was another part to the system, which was also connected, which is that the Starbucks tweets with the hashtag would be … these retweeted tweets and individuals’ tweets would be appearing in a physical location.

Chris Clearfield: And it was an ice skating rink, right? They showed up at a screen at an ice skating rink in London.

Andras Tilcsik: Yeah. So, in a very prominent location, there was a great Starbucks store there. Lots of people are watching it, and they start seeing these messages come up that are very positive initially, and people are tweeting about their favorite lattes and their gingerbread cookies and things like that, and then, all of a sudden, it turns out that some people decide to essentially hijack the campaign and start posting negative messages about Starbucks, critical messages about their labor practices, about their kind of tax avoidance scandals that they were caught up in at the time, especially in the UK. Starbucks, of course, thought about this possibility. They had a moderation filter put in place, but the moderation filter didn’t function for a little while, and talk about a tightly coupled system, right? Once the genie was out of the bottle, once people realized that anything they say with that hashtag will be shared both online and in these physical locations, then it was impossible to put the genie back into the bottle.

And again, even after they fixed the moderation filter, it was just too late. By that point, the hashtag was trending with all these negative messages. Then, traditional media started to pay attention to this funny thing happening on social media. So, there was another layer to that. Social media is connected to traditional media and back into people tweeting and Facebook sharing more and more about this. So, very quickly, essentially, a small oversight and a little glitch in the system that only lasted for a relatively short period of time turned into this big embarrassing PR fiasco that you couldn’t just undo, that was just spiraling and spiraling out of control.

Brett McKay: All right. So, the complexity was the social network. People act in unexpected ways. You can’t predict that, and the tight coupling was it was happening in real time. There’s nothing you can do about it really. Well, you also give examples of how technology has increased complexity and tight coupling in our economy, and you give examples of these flash crashes that happen in the stock market where, basically, there’ll be this giant crash of billions and billions of dollars, and then, a second later, a minute later, it goes back to normal.

Chris Clearfield: Yeah. It’s totally fascinating, and I think what’s fascinating about it is there are all these different … I mean, it’s very, very similar to social media in a way. There are all these different participants looking to see what everybody else is doing and kind of using the output of other people as the input to their own models and their own ways of behaving. And so, you sometimes get these kind of critical events where the whole system starts moving in lockstep together and moves down very quickly and then kind of bounces back. One of the things that I think is interesting about it is that regulators and policy makers, their mental model, generally speaking, of the stock market is it’s kind of like it used to be, just faster. In other words, it used to be that you had a bunch of guys on the floor of the New York Stock Exchange sort of shouting at each other, and that’s how trading happened and this sort of basic mental model, and it’s changing a little bit. But the basic mental model of regulators has been like, “Well, that’s still what’s happening except computers are doing the kind of interaction, and therefore, everything is faster.”

But really, the truth is that it’s a totally different system. The character of finance has totally changed. You also saw that in the financial crisis in ’07/’08, the way that the decisions that one bank or institution made, because of all the interlinkages in finance, the way that those decisions really cascaded throughout the system. So, if one participant started looking shaky, then the next question was, well, who has exposure to these people and kind of how is that going to propagate through this system? So, that was the big worry with AIG: it wasn’t so much the failure of AIG itself that was critical. It was all of the people that had depended on AIG, not only in the sort of insurance as we think about it traditionally, but all the financial institutions that had made these big trades with AIG. It’s totally fascinating stuff.

Brett McKay: And it sounds like things are just going to get more complex, as the whole thing people are talking about now is the introduction of 5G, which will allow us to connect cars, health devices, connect homes with smart things. Things are just going to get more and more complex.

Chris Clearfield: Yeah, and I think that kind of gets to the fundamental … I think the fundamental message of the book, which for us from our perspective is that this complexity, we can’t turn back the clock. I mean, I do tend to be skeptical of the costs and benefits of some of these tradeoffs, like my car being internet connected, but the truth is these systems in general, our world in general is going to get more complex and more tightly coupled. The thing that we do have some control over is the things we can do to manage this, the things we can do to operate better in these systems, and I think for us, the key question at the heart of the book that we try to answer is, why can some organizations build teams that can thrive in these complex and uncertain environments while others really, really struggle? And so, that’s a lot of what the focus of the book is on. It’s on this kind of … the upside in a sense. How can you get the capabilities while being better able to manage the challenges?

Brett McKay: And it sounds like everyone needs to start becoming somewhat of an expert in this because systems that they interact with on a day-to-day basis are going to become more complex, and so, they need to understand how they can get the upside without getting the downside. So, let’s talk about that, some of the things you all uncovered. So, you started off talking about how trying to prevent meltdowns can often backfire and actually increase the chances of meltdowns happening, and you used the Deepwater Horizon explosion as an example of that. So, how can adding safety measures actually backfire on you?

Andras Tilcsik: Yeah. So, we see this at Deepwater. We see this in hospitals. I think we have seen this in aviation. We see this in all kinds of systems. It’s a very understandable, basic human tendency that when we encounter a problem or something seems to be failing, we want to add one more layer of safety. We add some more redundancy. We add a warning system. We add one more alarm system, more bells and whistles to the whole thing in the hopes that we’ll be able to prevent these things in the future. Often, however, especially in the systems that are already so complex to begin with, what ends up happening is that those alarms and those warning systems that we put in place themselves add to the complexity of the system, in part because they become confusing and overwhelming in the moment. So, you mentioned Deepwater. I mean, one of the problems on the rig that day was that they had an extremely detailed protocol for how to deal with every single emergency that they could envision, but it was so detailed. It was so specific, so rigid that in the heat of the moment, it was pretty much impossible for people to figure out what it was that they should be doing.

In hospitals, a huge problem that we see all the time now is that there’re all sorts of alarms and warning systems built into hospitals to warn doctors and nurses about things that might be happening with patients or with medication doses or what have you. And at some point, people just start to tune out. If you hear an alarm or if you get an alarm on your computer screen where you’re ordering medication every minute or, in some cases, every few seconds, at some point, you just have to ignore them. And then, it becomes very hard to separate the signal from the noise, and in our really, kind of well-intentioned efforts to add warning systems, we often end up just adding a ton of noise to these systems.

Brett McKay: And that was part of the issue that was going on with the airplane crashes we’ve had recently. I think Air France, there was issues where alarms were going off, and they were being ignored, or even on the recent Boeing ones, there was sort of false positives of what was going on, and the computer system overrode and caused the crash.

Chris Clearfield: Yeah. So, I mean, the 737 MAX crashes that we’ve tragically seen recently, I mean, are exactly this problem. Boeing, very well-intentioned, very thoughtful engineers at Boeing, added a safety system to their airplane, and that makes sense, right, to first order. When you just kind of think about it in isolation, adding a safety system should make things safer, but they didn’t properly account for the complexity that that safety system introduced. And so, what we see is they’re now dealing with the tragic and unintended consequences of that.

Brett McKay: So, what can organizations, individuals do to cut through all that complexity as much as they can, so they can figure out what they need to focus on that can really make a difference in preventing meltdowns?

Andras Tilcsik: Well, one of the things that we find to be extremely important, and I think this applies to our individual lives as well as to running a big organization or a big system, is to make an effort to pick up on what we call weak signals of failure, on kind of near misses or just strange things that you see in your data or you experience in your daily life as you’re going around or running a system or trying to manage a team, something that’s unexplained, anomalies, and try to learn from that. This relates to complexity because one of the hallmarks of, I think, a highly complex system is that you can’t just sit down in your armchair and imagine all of the crazy, unexpected ways in which it can fail. That’s just not possible almost by definition. On the other hand, before these little things all come together into one big error chain, often what you see is a part of that error chain playing out, not all the way, but just a little part of it, so the system almost fails but doesn’t fail, or something strange starts to happen that could lead to something much worse.

If you catch it in time, you can learn from that, but I think what we see time and time again is that we have this tendency to treat those incidents, these small weak signals of failure, as kind of confirmations that things are actually going pretty well, right? The system didn’t fail. Maybe it got close to it. Maybe it was just starting to fail, but it eventually didn’t fail. So, it’s safe. We have this temptation to conclude that, while what we argue is that you’ll be in a much better position if you start to treat those incidents and those observations as data.

Brett McKay: Yeah. I think it’s an important point, the idea. Don’t focus on outcomes, right? Because everything can go right and be successful because of just dumb luck, but there’s that one time when those anomalies that popped up can actually … I mean, that’s the example, I guess, Columbia, the space shuttle, Columbia, is a perfect example of this where the engineers knew that those tiles were coming off, and it happened a lot. And the shuttles were able to successfully land safely. So, they figured, “Oh, it’s just something that happens. It’s par for the course.” But then, that one time in 2003, I mean, it ended up disintegrating the shuttle.

Chris Clearfield: Yeah. Exactly. We see this in our own lives, too. I mean, there’s sort of two examples I love. I think everybody who has a car has had the experience of the “check engine” light coming on, and the response being like, “God, I hope that thing goes off.” And then, it goes off later, and you’re like, “Okay. Good. I don’t have to worry about that anymore.” And yet, there is something that caused that light to come on in the first place, and you’re sort of kicking the can down the road a little bit. And with your car, not that that’s necessarily a bad strategy, but the more complex the system gets, I guess, the more you’re giving up by not attending to those kind of things. One example that actually happened to me right as we were working on the book was I had a toilet in my house that started running slowly, and I just kind of dealt with it for a while like, “Oh, yeah. That toilet’s running slowly. It always does that.” And then, eventually, it clogged, and it overflowed, and it was quite literally a shit show, but that’s because I didn’t attend to that kind of weak cue that there’s something here I should pay attention to.

Brett McKay: And how do you overcome that tendency? Is there anything from social psychology that we’ve got insights on how to overcome that tendency to ignore small anomalies?

Chris Clearfield: Yeah. I mean, one thing is you can just write them down, right? Write them down and share them with other people, and the other thing is you’ve got to be part of an organization, sort of thinking about it more from like a team culture perspective and a sort of work perspective. You’ve got to be part of an organization that’s willing to talk about mistakes and errors because so many of the way that these things happen comes out of mistakes and comes out of people who miss something maybe as a function of this system, but to learn from it, you’ve got to be willing to talk about it. So, we’ve been working with doing consulting work with a company, and one of the things we’re helping them do is we’re helping them sort of reimagine the way … This is a pretty big tech company, and we’re helping them reimagine the way that they learn from bugs that they’ve introduced in their software.

They have this postmortem process that they’ve been running for a long time, and they have a good culture around it, and we’re just helping them figure out how to push the learning out further in the organization and kind of help them make sure it is reinforcing a culture of blamelessness rather than blaming the person who introduced the bug or brought down the system or whatever it is. So, I think pushing the learning out as much as possible and reinforcing the culture of everybody’s expected to make mistakes. The important thing is you raise your hand and say, “I messed this up,” and then, everybody can learn from it.

Brett McKay: I think you all highlight organizations where people get rewarded for reporting their own mistakes. There’ll be some sort of reward ceremony like, “Hey, this guy messed up, but he figured out something we can do with that mess-up.”

Chris Clearfield: Yeah. The company, Etsy, who many of us know as this kind of great place that sells these handmade crafts, has a really super sophisticated engineering organization behind it. They have 300, 400 engineers that are really doing cutting edge software stuff, and every year, they give out what they call the three-armed sweater award, which is an award for the engineer who broke their website in the most creative and unexpected way possible. So, that’s really an example of a culture kind of celebrating these kinds of problems through the learning and saying, “Yes, we know mistakes are going to happen. The important thing is we learn from them.”

Brett McKay: Okay. So, you can pay attention to anomalies, even if they’re small. So, if the “check engine” light comes on and goes off, take it to the dealership because there might be something bigger looming there. Create a culture where anomalies are … people bring those up willingly. They don’t try to hide it. Then, another part of preventing meltdowns is getting an idea of how likely they’re going to occur because a lot of these meltdowns that happen people are just like, “We didn’t see it coming. We had no idea this could happen.” Is it even possible, as systems get more and more complex, to even predict? Are these all going to be black swan events as Nassim Taleb says, or are there techniques we can use where we can hone in a bit and get an idea of how likely some meltdown is going to occur?

Andras Tilcsik: Yeah. So, I think that’s a great question. I think, again, prediction from your armchair is not possible, but there are two things you can do. One is, as we just discussed, you can start paying attention to these signals that are emerging and treat those as data rather than as confirmation that things are going just fine. And the other thing you can do is that there are now a bunch of techniques that social psychologists and others have developed to really try to get at the sort of nagging concerns that people are not necessarily bringing up, not necessarily articulating, maybe not even to themselves and certainly not to their team and to their manager. And we talk about a bunch of these in the book, but I’ll just highlight one that we both really like, is the pre-mortem technique, which essentially entails imagining that your project or your team or whatever high stakes thing you might be working on has failed miserably in six months from now or a year from now and then getting people in your team to sit down and kind of write a short little history on that failure.

And when you do this, you have to use a lot of past tense. You have to tell people, “Hey, this failure has happened. Our team has failed. Our project was a miserable failure,” without giving them any specifics, and then, turning things around and asking them to give you their kind of best set of reasons for why that might happen. And there is some fascinating research behind this technique that shows that when you ask this question in this way, people come up with much more specific reasons for why something might actually fail. They come up with a much broader set of reasons for why that failure might come about, and it also helps a lot with the social dynamics in a group. Often in an organization, we are rewarded for coming up with solutions. Here now, we are rewarding people at least by allowing them to be creative and talk about these things for articulating reasons why a project or a team or a business unit might fail.

And it really has this interesting cognitive and kind of social effect that really liberates people to talk about these issues, and it’s been shown to be vastly superior to the normal way that we usually do this, which is just purely brainstorming about risks, right? We do that all the time when we are running a project. We sit down, and we think, “Okay. What could go wrong? What are the risks here? Let’s think about that.” It turns out that’s not the right way to do it, and there is a right way to. There is a right way to do it.

Brett McKay: As I was listening to this, I can see this being applied just on people’s personal lives, bringing it back. If you were planning a vacation with your family, you’re sitting down with the Mrs. and be like, “All right. Our vacation was a complete failure. Why was it a complete failure?” Right?

Chris Clearfield: Totally. Totally.

Brett McKay: Or you’re planning a class reunion, right, and there’s all these moving parts. It’s like, “Okay. My class reunion was a complete bust. What happened?”

Chris Clearfield: Totally.

Andras Tilcsik: Yeah. Or say you are sitting down with your family and thinking about a home renovation project, and you know it’s going to take about two months, and you say, “Okay. Now let’s imagine it’s three or four months from now. The project was a disaster. We never want to see that contractor ever again. We really regret that we even started this whole thing. Now, everybody write down what went wrong.” Again, you use the past tense rather than asking what could go wrong, and yeah, I think it would really help people. Chris and I actually collected some data on this, and it really seems like people volunteer information that sort of bothers them, but they never really had an opportunity to bring up. And I think using that to collect data about risks is one of the most powerful predictive tools we have.

Chris Clearfield: And to your question, Brett, I think the vacation one is a great example, right? The output of this, it’s not like we’re not going to take the vacation, right? But maybe we are going to figure out, “Oh, in this part of the trip, there’s no activities for the kids to do. Maybe we need to figure out is there a playground near where we’re staying, or is there something fun for them to do? Maybe we need to, whatever, make sure the iPad is charged or bring a little nature kit so that they can go exploring with their magnifying glass.” Or whatever it is, it’s like the key is that this lets us raise issues, which we can then resolve in a creative way. It’s not like, “Well, we’re not going to take the vacation, or we’re not going to have the class reunion.”

Brett McKay: So, one of my favorite chapters in your book about how to mitigate the downsides of complexity and tightly coupled systems is by increasing diversity on your team, your group, and diversity of viewpoints. Studies have shown that outcomes are better, more creative solutions are developed, et cetera. I’ve read that literature, and I’ve had people on the podcast that talk about the same thing, and I ask them, “What’s going on? Why?” And the answer they give is, “Well, it just does.” I never understood it because I always thought, “Well, you have a diverse group of people, but they all give equally bad ideas.” But I think your guys’s explanation of why diversity works is interesting because it makes counterintuitive sense, and it’s that diversity breeds distrust, and that actually helps us get better answers. Can you talk about that a bit?

Andras Tilcsik: Yeah. I think this is coming from some pretty recent research done really in the past sort of three to five years where psychologists are increasingly discovering this effect that in diverse groups, people tend to be a bit more skeptical. They tend to feel a little less comfortable, and if you compare those groups to homogeneous groups, there seems to be this effect that when you’re surrounded by people who look like you, you also tend to assume that they think like you, and as a result, you don’t work as hard to question them. You don’t work as hard to get them to explain their assumptions, and that just doesn’t happen as much in diverse teams. In diverse teams, people don’t tend to give each other the benefit of the doubt, at least in the cognitive sense, quite as much. So, you mentioned distrust, and I think that’s sort of an interesting way to think about it, but I would say that it’s distrust in a pretty specific sense. It’s not distrust in a kind of interpersonal sense. It’s not that I don’t trust you or that I don’t trust Chris to do the right thing or to want to have the same good outcomes that I want to have.

It’s more that I might be more skeptical about your interpretation of the world, or at least, I have some skepticism as to whether you buy my interpretation of the world, and this, of course, creates some friction. And friction can be healthy in a complex system. Of course, you don’t want people to agree too easily. You want people to unearth assumptions and question each other. While if you’re running a very simple kind of operation, and it’s very execution oriented, there’s not a lot of strategic thinking, there’s not a lot of these black swan type of events you need to worry about, diversity is probably not particularly important, at least from a kind of effectiveness point of view. But if you’re running something that’s complex, and you really can’t afford people to fall in line too easily, and you really want some level of dissent, what data show across types of diversity, whether it’s race or gender or even something like professional background, is that diversity tends to make a very positive difference in those systems if it’s managed well.

Brett McKay: Yeah. Like you said, diversity doesn’t have to be based on race or gender or whatever. It can be profession. You give the example of the Challenger explosion. All the engineers thought nothing was a problem with the O-rings, right, which ended up causing the failure, but there was some guy, some accountant, basically, who saw that there was an issue, and he got ignored because all the engineers were like, “Well, you’re not an engineer like us. You’re not wearing the white shirt and tie and got the slide ruler. Go back to wherever you are.” And it ended up being the O-ring causing the problem.

Andras Tilcsik: Yeah, and we see the same kind of effect in all kinds of areas. One set of results we write about in the book is about small banks in the US, community banks, right, your credit unions, your local banks, and we look at their boards. And it turns out when these banks have boards that have a good amount of professional diversity, so not only just bankers but also lawyers and journalists and doctors and sort of people from the community, there tends to be a lot more skepticism and disagreement on those boards, which doesn’t seem to make a big difference if these banks are doing very simple, straightforward things, just running a couple branches in a small town. But once they start to do more interesting, bigger, more complex things like expanding out of a county or getting engaged in sort of more complex, high stakes lending markets, then all of a sudden, having that kind of disagreement and skepticism and diversity on the board becomes really, really helpful. And it turns out that it measurably increases the chances that those banks will survive, even during something like the financial crisis.

Brett McKay: And how do you manage that skepticism so that it’s productive, and it doesn’t get in the way of getting things done?

Andras Tilcsik: So, I think, to a large extent, it’s a leadership challenge, sort of how you run that group, and I think instilling a culture where people understand that there’s a distinction between interpersonal conflict and task conflict, so conflict about I don’t like the way Chris dresses and drinks his coffee and all that kind of stuff …

Chris Clearfield: I drink my coffee just fine. Thank you.

Andras Tilcsik: I think your coffee is just fine … versus task conflict, which is I disagree with Chris’s interpretation of this particular event, and sort of treating the first thing, the interpersonal stuff, as something that we need to hammer out and get rid of, but making sure that our team and organizational culture treats the second thing, the task conflict, as something that we need to celebrate, right? It’s great that we disagree because it’s data, because we have these different perspectives and these different little data points about this particular thing, and that forces us to state our assumptions. And I think as a manager or a director on one of these boards or even at home if you’re kind of thinking about this in a family context, managing disagreement in this way is really critical, and making sure that the task conflict, the sort of cognitive conflict, that we do want to have doesn’t turn into interpersonal conflict, but rather gets treated basically as data about this complex system that we have to navigate, is really the way to go.

Chris Clearfield: And then, I’ll add something to that because when we work with leaders, especially when we work with senior leaders, I mean, there’s always a tension between … right? I mean, senior leaders got to be senior leaders because they were good at making decisions, good at their judgment, but there comes a time, particularly in a complex system, where they have to start to delegate more. Otherwise, they will be overwhelmed by the amount of data that they have to process. They will be overwhelmed by the … and they will not be able to predict the interactions between different parts of the system, right? That’s only something that somebody who is working down who is … if they’re a programmer programming every day, if they’re an engineer working directly on the engineering problem, only they will be able to kind of make the right call. And so, I actually think this ties into this decision making and diversity question in a real, tangible way. One of the things we help leaders do is sort of set up the kind of output parameters of, okay, what risks are they comfortable with their teams taking? What mistakes are they comfortable with people making?

And then, any decision that seems like it’s kind of in that ring fence really should be pushed down as low in the chain as possible, and any disagreement that can’t be resolved at a lower level should get escalated. But one of the things that does is that means that you don’t have a leader who is kind of stopping the decision making process when people actually have enough information, and even when people make a decision and make a mistake, you have a process in place that says, “Okay. Well, this isn’t the call I would’ve made. Here’s why my thinking is different in this case.” But because you’ve kind of, sort of put a ring around the types of risks that you’re willing to let people take, you can end up having really effective decision making that doesn’t put the firm or doesn’t put different parts of reputation or your reputation or a big project at risk. So, I think that part of the question about decision making, diversity just adds data, but many organizations struggle with how to make better decisions faster, and that comes from thinking about things a little more systematically from the get-go.

Brett McKay: That brings us back to how people can apply this to their, just, home life, their personal life. I imagine if you’re trying to make a decision that has a lot of complexity involved, those really tough decisions that people encounter every now and then in their life, talk to a whole variety of people. Have a sounding board you can go to, not just your friends who are going to say, “Oh, you’re great. Whatever you want to do is fine.” Actually look for those people who are going to tell you what’s what and give you contradictory feedback and have them explain their thinking.

Chris Clearfield: Yeah, totally, and I think one of the ways to do it, too, is to just structure your question to your friends very carefully. You can ask them in the form of a pre-mortem. You can say, “Hey, I want your help thinking through whether or not I should take this new job, whether or not I should move with my family to St. Louis,” or whatever it is. “Let’s imagine we do this, and it turns out, six months from now, I’m calling you and telling you this is a total disaster. What are the things that led to that?” So, you can really use that technique to create skeptics in your community and create skeptics in your network, and as you said, go to outsiders. Go to people who are going to say, “No. This isn’t the right decision, and here is why.” That can be really helpful.

Brett McKay: Another sort of bias that we have that can cause us problems with complex, tightly coupled systems is this idea of get-there-itis, which is what you guys call it. What is that, and how does that contribute to disasters?

Chris Clearfield: Yeah. Get-there-itis, we’re not the ones who came up with that name. It’s actually, I feel like, an official term in the aviation accident literature. It is basically this phenomenon that happens to really highly trained pilots. As they get closer and closer to their destination, they get more and more fixated on landing at the intended point of landing. And so, they start to take information that sort of suggests that maybe there’s a problem, or maybe they should do something else, and they start to discard it, and this is kind of this natural human tendency that we all have. We look for data that confirms our opinion rather than what we should be doing, which is look for data that sort of disagrees with our opinion. But the get-there-itis, it happens when flight crews are flying to their destination airport, but it also happens to all of us when we’re working on a project, right? So, once a project is started, boy, is it hard to take a step back and say, “Actually, things have changed. This is no longer the right decision. This is no longer the right way to run our marketing campaign, or this is no longer the right piece of technology to include in this car, or this is no longer … We don’t even think there’s a market for this craft beer that we’re brewing anymore.”

People tend to, as the kind of pressure gets ramped up, people tend to just want to do more of the same thing and want to push forward more and more and more, and sometimes, that works out, and that kind of helps make it even more dangerous. But when it doesn’t work out, it can really blow up in our faces in really big ways, from actual airplane crashes to things like, you can look at Target’s expansion into Canada, which kind of ended in thousands of people being laid off, and I think seven billion dollars being written down by Target. So, that get-there-itis is really a part of pushing forward when we should be able to take a step back.

Brett McKay: It sounds like there’s some sunk cost fallacy going on there.

Chris Clearfield: Boom. Yeah.

Brett McKay: A lot of ego protection. People are like, “Well, I started this thing. I’m a smart person. So, when I decided, it must’ve been a good idea. If I say I’m not doing it anymore, it means I’m a dummy. That’s going to look bad. So, I’m just going to keep going.”

Chris Clearfield: Yeah. Totally. And I think that there’s so much there, and there’s a great story from … I think it’s in one of Andy Grove’s books, when he was at Intel, and they were sort of in the process of looking at the way that the market had really shifted around them, and he and the CEO at the time, I think, sort of asked themselves this question, “What decision would we make if we had just been fired, and then, they brought you and I back in from the outside, and we were looking at this with fresh eyes what decision we would make?” And so, being able to kind of shock yourself out of that sunk cost fallacy, being able to shock yourself out of that status quo bias is huge. And you mentioned ego, and I think one of the things that the research shows, and we see over and over again in our work is that the most effective organizations are the ones that start with a theory that they want to test, and then, test that theory and use that to kind of build what they’re going to do rather than starting with a theory that is kind of an implicit theory but that turns into, “This is what we’re going to do,” without really having any great data on how much that works.

Brett McKay: Well, Andras, Chris, it’s been a great conversation. Where can people go to learn more about the book and your work?

Chris Clearfield: So, you can find us in a bunch of places. We have a book website, rethinkrisk.net, which also has a short, two, three minute quiz that you can take to find out if you are heading for a meltdown in one of your projects or your situations. Twitter for our book is @RethinkRisk. I’m on Twitter, @ChrisClearfield, and then, my personal website is chrisclearfield.com.

Brett McKay: Well, guys, thanks so much for your time. It’s been a pleasure.

Chris Clearfield: Brett, this has been awesome. Thank you.

Brett McKay: My guests today were Chris Clearfield and Andras Tilcsik. They’re the authors of the book, Meltdown: Why Our Systems Fail and What We Can Do About It. It’s available on Amazon.com and bookstores everywhere. You can find out more information about their work at rethinkrisk.net. Also, check out our show notes at aom.is/meltdown, where you can find links to resources where you can delve deeper into this topic.

Well, that wraps up another edition of the AOM podcast. Check out our website at artofmanliness.com, where you can find our podcast archives. There’s over 500 episodes there as well as thousands of articles we’ve written over the years about personal finances, health and fitness, managing complex systems, mental models. You name it, we’ve got it, and if you’d like to hear ad-free new episodes of the Art of Manliness, you can do so on Stitcher Premium. For a free month of Stitcher Premium, sign up at stitcherpremium.com, and use promo code MANLINESS. After you’ve signed up, you can download the Stitcher app for iOS and Android and start enjoying ad-free Art of Manliness episodes. Again, stitcherpremium.com, promo code, MANLINESS. And if you haven’t done so already, I’d appreciate it if you’d take one minute to give us a review on iTunes or Stitcher, whatever else you use to listen to your podcasts. It helps out a lot, and if you’ve done that already, thank you. Please consider sharing the show with a friend or family member who you think will get something out of it. As always, thank you for the continued support, and until next time, this is Brett McKay reminding you to not only listen to the AOM podcast, but put what you’ve heard into action.